By Anthony Mini, President/CISO

“Around 10% of enterprise-generated data is created and processed outside a traditional centralized data center or cloud. By 2025, Gartner predicts this figure will reach 75%.”

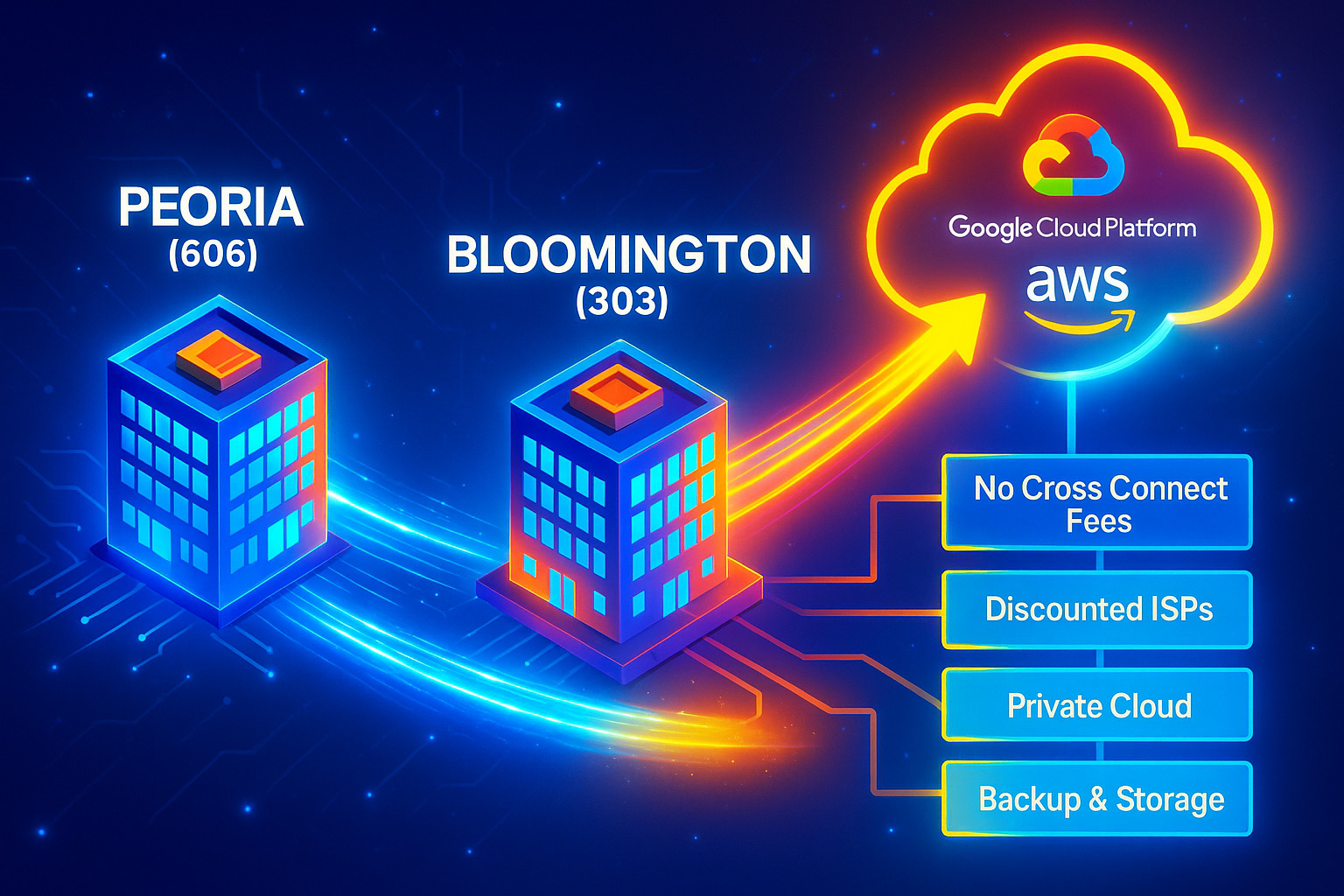

In the world of data centers, the term “edge” describes the computing resources that host applications and services outside of a centralized core. Edge computing can reduce latency, improve scalability, and lower the cost and complexity of managing these resources. I am personally a fan of how Gartner explains edge computing in three tiers: far edge, near edge, and core.

How is it defined?

Edge computing is a distributed information technology (IT) architecture in which client data is processed at the periphery of the network, as close to the originating source as possible. It decentralizes the traditional data center model: storage and compute resources are moved out of the main data center and placed closer to the source of the data. This allows data to be processed more quickly and with less reliance on the internet and other external networks.
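To make the definition concrete, here is a minimal Python sketch of the idea, not a production design. It assumes a hypothetical edge node that receives raw sensor readings, reduces them to a small summary locally, and decides on the spot whether to react. The names `Summary`, `summarize_at_edge`, and `needs_local_action`, along with the sample values and the limit, are illustrative assumptions rather than part of any real product or API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Small record an edge node could forward to the core instead of raw data."""
    sensor_id: str
    count: int
    average: float
    maximum: float


def summarize_at_edge(sensor_id: str, readings: list[float]) -> Summary:
    """Reduce a batch of raw readings to one summary on the edge node."""
    if not readings:
        raise ValueError("no readings to summarize")
    return Summary(sensor_id, len(readings), mean(readings), max(readings))


def needs_local_action(summary: Summary, limit: float) -> bool:
    """Decide locally, without a round trip to the core, whether to react."""
    return summary.maximum > limit


# Hypothetical readings collected close to the source.
readings = [20.0, 21.0, 19.0, 40.0]
summary = summarize_at_edge("sensor-1", readings)
print(summary)
print(needs_local_action(summary, limit=35.0))
```

In this sketch only the summary would need to travel to the central data center, and the time-sensitive decision is made next to the data source, which is where the latency and bandwidth savings described above would come from.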

Why does it matter? | Benefits of Edge Computing

Edge computing is gaining popularity for three main reasons: 1) increased spending on edge technology, 2) the rising number of endpoint devices and the data they produce, and 3) the emergence of 5G and, eventually, 6G networks. As organizations continue to recognize the power of edge computing to reduce latency, enable near-real-time processing, and open up new use cases, spending on edge technology is expected to keep growing. At the same time, the number of endpoint devices and the volume of data they generate are expected to grow sharply, creating an attractive target for threat actors. Finally, 5G networks allow organizations to leverage edge computing with ultra-low latency and high bandwidth, a trend that 6G is expected to extend, while telecommunication companies are becoming major players in the space. With edge computing expected to keep growing over the next three years, businesses must be prepared to address the security risks associated with this technology.

What’s the goal?

In general, the goal of edge computing is to give users more efficient and cost-effective access to applications and services. Decentralizing computing resources makes it possible to reduce latency, improve scalability, and lower the cost of managing those resources. Edge computing can also give users greater control and flexibility, since they can decide where to locate their computing resources.