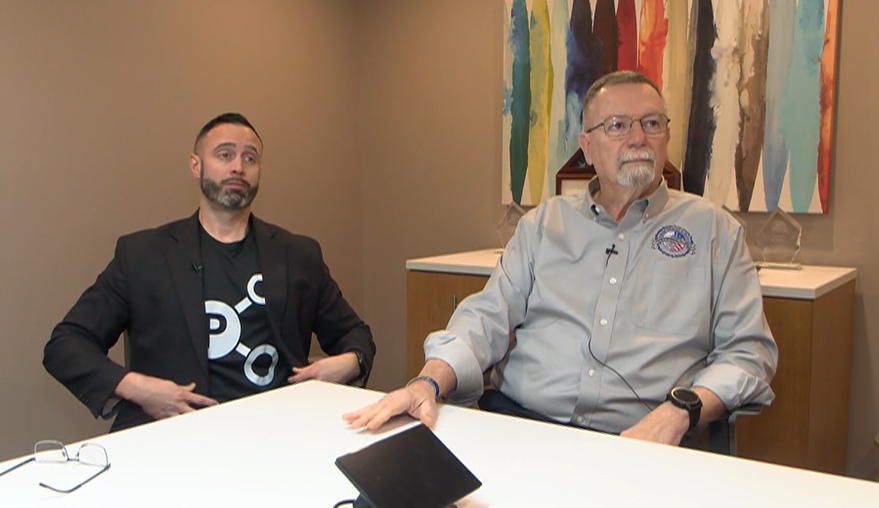

WEEK-TV recently interviewed Pearl Technology’s Anthony Mini and Dave Johnson as part of a story on how scammers are using artificial intelligence… and how you can recognize these scams.

PEORIA (25News Now) – Generative Artificial Intelligence, or AI for short, has been used for advertising, political campaigns, and even pornography. These days, it can also be used by scam artists and con-men.

AI is complicated for people without a computer science background, but it’s basically a powerful computer, or set of computers, that uses existing data to make new pictures, videos, and sounds.

It’s been a hot topic since its internet emergence in the late 2010s.

People have raised all kinds of questions: do we count what it generates as art? Does the data-scraping foundational to these models constitute plagiarism? What do we do about all the power and water it takes to run the data centers?

“AI has armed regular, everyday criminals to now be cybersecurity criminals,” remarked Anthony Mini of Pearl Technology in Peoria.

He told 25News that generative AI has made their job of protecting local businesses from cyberattacks much harder.

“Three to five years ago, when there was an incident, we’d look back at the initial email that was the initial compromise,” he explained. “[It was] riddled with red flags. Now, when we look at them, there’s almost zero red flags. You’d have to pay attention to the finer technical details.”

Mini is referring to phishing attacks, which happen when a scammer sends an email posing as someone’s bank, workplace IT department, or other trusted contact. The goal is usually to convince the person to download disguised malware or click on a link that will give the hacker access to sensitive information or a company network.

According to Mini, phishing emails were once easier for people to spot. They’d be vague or feature spelling or grammatical errors. Now, scammers can press a button and have a large language model generate professional-style emails that can be tailored to specific targets.

Additionally, Mini’s partner Dave Johnson said cyber-crooks here and abroad are no longer held back by the language barrier.

“If I don’t speak the language, AI will actually take the email and translate it into the language I want to target,” explained Johnson.

The two are also members of an FBI partnership program called InfraGard. Through this program, they’ve seen more insidious scams while helping the Bureau with local cyber-crimes.

One of them is the “Grandparent Fraud,” which Johnson and Mini told 25News has struck Peoria’s senior community several times now.

Previously, scammers would call or text someone, often a grandparent, to convince them that a loved one was being held hostage or was in trouble with the law. AI has allowed these crooks to make the scenario more frighteningly real.

“[Scammers] take [a child’s] picture off the internet. [They] record some of their voice because they’ve been on TikTok… and then [they] can combine those two and make them appear to say anything [they] want them to say,” said Johnson.

Armed with the ability to fake their way through phone and video calls, the scammers have an easier time convincing their target to send money for the freedom and safety of their relatives.

“It looks like my grandchild, it sounds like my grandchild, it must be my grandchild,” said Johnson simply.

The pair also said it’s a crime that may be under-reported.

“[People] buy into it; they end up getting scammed, and they’re embarrassed, and they really don’t know who to report to,” said Mini.

These scams are really easy to set up. The pair provided 25News with a “deepfake” they made, with permission, of Peoria radio personality Greg Batton endorsing Johnson for president.

They did it in less than an afternoon.

“You can appear to be anybody. If you’re targeting a specific population, you would choose somebody that is well-renowned in that group,” Johnson said.

It’s something that’s happened already.

During the open enrollment period in 2023, AI “voiceclone” ads flooded YouTube and social media feeds. Computer-generated impersonations of Dr. Phil, Joe Rogan, Ben Shapiro, and many others urged viewers to follow a link where they could claim a nonexistent healthcare refund.

The same scam re-emerged on Facebook in 2024’s open enrollment period, this time featuring an AI Donald Trump.

What can be done about this AI flimflammery? Some politicians are pointing towards regulation.

“We have to make sure we’re one step ahead of scammers. We have to make sure there’s legislation there that will protect people. [A smartphone] is their way in. Those scammers who are thousands of miles away? This is their way into your life,” said Democratic Illinois Representative Eric Sorensen during a press call in February.

He plans to re-introduce a bill increasing penalties for phone scammers if they use AI.

Similar measures against AI have been introduced over the past several years, but according to an online tracker by policy institute The Brennan Center, many of those bills died in committee or remained there.

That means telling truth from tech falls to everyday Americans.

To learn some of the signs of deepfakes, 25News visited Dmitry Zhdanov at Illinois State University, where he serves as the State Farm Endowed Chair of Cybersecurity. He’s been researching generative AI with his students and has a few tips.

“You can see the lights are on top of the picture, but the soldiers’ faces are lit from the left. It’s inconsistent,” said Zhdanov, motioning to a screen displaying an image Meta AI generated of soldiers standing in a hallway.

“The hands are very poorly defined. Especially the right hand over here: you cannot tell apart the fingers at all,” he added.

That’s the thing with AI: it generates an image, but not the context behind it. Unlike a human artist, it doesn’t visualize things in 3D space using lived experience. It will also make mistakes because it’s effectively making a data collage.

Zhdanov said that’s why looking for background cues, such as nonsensical text, remains a reliable technique.

“When dealing with text, it treats each letter as an image. So as you noticed, it doesn’t always reconstruct the font right,” explained Zhdanov.

It’s like a magic trick: don’t look where the magician wants the audience to look. Instead, look for gibberish in the background. Look for straps on a bag that don’t connect. Look for hands or fingers coming from nowhere.

When watching videos, such as one he generated using OpenAI’s Sora, Zhdanov said viewers can watch the mistakes pile up.

Take, for example, an AI-generated video of a generic man eating pizza. A few things are noticeable enough to give away the ruse.

“First of all, he’s not biting the pizza, he’s just moving it in and out of his mouth. Second, as we talked about before, look at the posters… ‘Pazza Pizz,’” Zhdanov laughed.

Note how the posters almost say “pizza,” but not quite.

Example of AI-generated imagery (Generated by Dmitry Zhdanov using Sora by OpenAI)

For all this detective work, Zhdanov said it’s no good if people don’t also understand what users posting AI tomfoolery are trying to say or accomplish on social media.

“Do you know or do you realize someone is trying to manipulate you? Usually this comes up when it’s emotional engagement, right? Does it make you mad or something like that?” Zhdanov asked.

“There’s pressure to act. If someone says you need to click in the next ten seconds, they’re trying to trick you, right? A good rule is always to stop, disengage, and think about it,” he elaborated.

That’s the same thing Mini and Johnson recommend when on the other end of a suspicious call or text.

“The bank sends you a text saying, ‘$10,000 has been deposited into your account, click the link.’ Don’t click that link. Instead, get off of there, log on through your app or through the bank’s website and check your account,” advised Johnson.

The same goes if a grandkid calls from an unknown number and asks for money: text them or their parents to check if they’re okay.

The big picture here is that AI has given people new tools to get what they want from others, whether that’s clicks on social media, a political response to misinformation, or the money of an unsuspecting victim.
